Essay AI Literacy as a Democratic Skill: From Symbiosis to Civic Responsibility Part I

A forward-looking analysis of the AI era's impact on our society

By Richard P. Kindlman

A Manifesto – Daring to Think Bigger

In his book Our Final Invention, James Barrat presents a critical observation that many overlook: the misconception that computers process information in the same way humans do—“…unless there is something mystical or magical about human thinking.”

The core postulate in all my writing is this:

Human thinking is not merely a process—it is a mystical and genuinely magical phenomenon.

It is the unique ability to create new knowledge from nothing. This capacity is what distinguishes us from artificial intelligence. While AI remains bounded by its programming and data, humans are capable of abstraction, creation, and reinvention.

This essay is built upon that belief and is an invitation to explore the frontier of AI literacy as a civic imperative. My aim is to help you see that your mind is infinite—and to guide you in nurturing it to bear meaningful fruit.

1. Introduction

Artificial Intelligence (AI) and intelligent machines are transforming education and care. But if they are to fulfill their revolutionary promise, their purpose must extend beyond technical efficiency. The future of AI lies not in humanoid faces or mechanical hands, but in environments that listen and respond—systems that adapt to our rhythms, protect our dignity, and support our autonomy.

This essay frames AI literacy as a civic skill essential for healthy democracies. AI literacy is the capacity to interrogate, co-design, and responsibly use intelligent systems, so that technology serves autonomy, dignity, and justice and becomes an extension of human freedom rather than a replacement for it.


2. Symbiotic Intelligence: Lessons from the Forest

In nature, mycorrhizal fungi create vast underground networks connecting trees and plants, enabling the sharing of nutrients and signals—this is the “Wood Wide Web.” These ecosystems demonstrate how interdependence, not isolation, fosters resilience.

Similarly, symbiotic AI should act as connective intelligence—amplifying human capabilities through mutual reinforcement:

Humans contribute creativity, judgment, and civic responsibility

Biotic machine-organisms (e.g., body-embedded sensors) collect biological signals

Abiotic machine-beings (e.g., environmental or cloud systems) mediate communication across the partnership

Together, these entities form a symbiotic trinity that supports care, learning, and autonomy.

In one blog post, I described how this model would look in practice:

“After work, the human returns home with their biotic machine-organism. The abiotic machine-being, meanwhile, heads to a local cultural center for updates, maintenance, and social interaction. This ensures cohesion in the collective: human, biotic machine, and abiotic partner.”

To preserve this balance, each symbiotic unit must consist of a specific human and their two machine partners. This collective not only produces value but also relaxes, learns, and grows together—forming a culture of co-evolution.

This model raises provocative questions about legal personhood for machines and security—what protections should these centers have if machine-beings become corporate assets? These are not distant hypotheticals—they are ethical imperatives.

3. Why AI Literacy is a Democratic Skill

AI literacy is civic as much as it is technical. Citizens must be equipped to question the systems that shape education, healthcare, and public life.

Sundar Pichai reminds us that “the future of AI is about augmenting—not replacing—human capabilities.” Similarly, Andrej Karpathy famously noted, “The hottest programming language is English”—emphasizing how communication with AI is now a fundamental skill.

The democratic challenge is ensuring these systems strengthen autonomy and justice, not reinforce surveillance or passivity.

4. Pedagogical Models for AI Ethics

Ethical AI education must be participatory and grounded in real-world practice. When students interact with intelligent machines, not just theoretically but as partners, they learn to reflect, to iterate, and to co-create knowledge.

Case Study A: Jill Watson at Georgia Tech

In 2016, Georgia Tech introduced "Jill Watson", a virtual teaching assistant initially built on IBM Watson and trained on tens of thousands of forum posts. Jill answered routine student questions, freeing human TAs for higher-order dialogue.

Value: Attention is redistributed—machines handle repetition, humans focus on mentorship.

Case Study B: Student-Designed Health Chatbots (Hoover City Schools)

Students designed a chatbot to teach cardiovascular health. They learned not just content, but how to address data bias and ethical constraints. This is AI literacy in action.

5. Humanoid Robots vs. Intelligent Environments

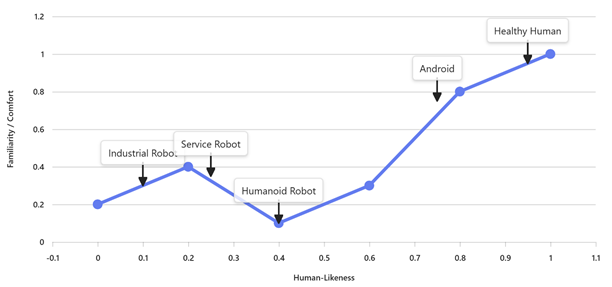

Design determines trust. Studies show that ambient systems—those embedded invisibly into rooms and devices—promote dignity better than humanoid robots.

One reason for this lies in the uncanny valley effect, a well-documented psychological phenomenon. When robots or avatars resemble humans closely—but not perfectly—they evoke discomfort or even revulsion. Instead of empathy, users feel estrangement. This cognitive dissonance arises because the entity appears almost—but not quite—human, triggering our sensitivity to social cues and our detection of inauthenticity.

In environments such as classrooms or elder care, this effect can undermine trust and emotional safety. Intelligent environments bypass this by embedding technology in walls, furniture, and everyday tools—creating seamless, non-threatening interfaces.

Figure: The Uncanny Valley Effect

Human-likeness vs emotional response. Designs that are “almost human” tend to evoke discomfort—highlighting the value of ambient, non-anthropomorphic systems.

Industrial Robot (far left): Clearly mechanical, low human-likeness, moderate comfort.

Service Robot (mid-left): Functional design, some human-like features, still comfortable.

Humanoid Robot (in the valley): Near-human appearance but imperfect, causing discomfort.

Android (near the peak): Almost indistinguishable from human, comfort rises again.

Healthy Human (far right): Full human-likeness, maximum comfort.

Case Study C: Ambient Intelligence (Stanford)

Sensor-rich hospital rooms monitor hygiene, falls, and vital signs without intruding. These “intelligent spaces” reduce harm and maintain privacy.

Concept Extension: Intelligent Bathing

In elder care, an intelligent bathtub could surround the user, accurately controlling water temperature, posture support, washing and drying cycles, while monitoring safety signals (e.g., dizziness). An intelligent machine becomes part of the caring environment, enabling independence with dignity.

6. Dignity in Elder Care and Education

Dignity is not emotional mimicry; it is about support that respects autonomy.

Case Study D: Nobi Smart Lamps (UK)

In UK care homes, AI-enabled lamps detected all falls during pilots, cut response times dramatically (to around two minutes), and reported up to 84% fewer falls compared to baseline at one site. While these results come from vendor-linked and partner case studies, they illustrate how ambient, privacy-respecting devices that blend into rooms can enhance safety without imposing a humanoid presence, preserving trust and privacy.

Intelligent environments support dignity by staying invisible until needed.

7. Privacy and Surveillance

Respectful design starts with transparency, consent, and data minimization. The EU’s AI Act (Art. 50) requires disclosure when people interact with AI systems and mandates labeling of synthetic content (e.g., deepfakes). OECD guidance on data access and sharing emphasizes FAIR data principles and privacy‑enhancing technologies as foundations for trustworthy AI.
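To make the design principles above concrete, here is a minimal sketch of how consent, data minimization, and AI disclosure might look inside an ambient care system. Everything in it is an assumption made for illustration: the `RawReading` and `CareAlert` types, their field names, and the `minimize` function are hypothetical and do not come from the AI Act, OECD guidance, or any product mentioned in this essay. It shows one possible shape of the idea, not a compliance recipe.

```python
from dataclasses import dataclass
from datetime import datetime, timezone
from typing import Optional


@dataclass(frozen=True)
class RawReading:
    """Hypothetical raw data captured inside a room (field names are assumptions)."""
    resident_name: str
    room_id: str
    video_frame: bytes
    fall_detected: bool
    captured_at: datetime


@dataclass(frozen=True)
class CareAlert:
    """Hypothetical minimized record: the only data allowed to leave the room."""
    room_id: str
    event: str
    captured_at: datetime
    ai_generated: bool = True  # disclosure flag: the alert was produced by an AI system


def minimize(reading: RawReading, consent_given: bool) -> Optional[CareAlert]:
    """Return an alert holding only what a caregiver needs, or None."""
    if not consent_given:
        return None  # no consent, so nothing is shared
    if not reading.fall_detected:
        return None  # nothing to report, so nothing is sent
    # The name and the video frame are deliberately left behind.
    return CareAlert(
        room_id=reading.room_id,
        event="fall",
        captured_at=reading.captured_at,
    )


reading = RawReading(
    resident_name="Jane Doe",  # invented example value
    room_id="room-12",
    video_frame=b"\x00",
    fall_detected=True,
    captured_at=datetime(2025, 1, 1, tzinfo=timezone.utc),
)
alert = minimize(reading, consent_given=True)
```

In this sketch the caregiver learns that a fall happened in a given room and that an AI system raised the alert, while the resident's name and the video never leave the sensor. A citizen with AI literacy can ask the same question of any real system: which of these fields does it actually need?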

If AI feels like surveillance, it fails. Symbiosis succeeds by invitation, not intrusion.

8. Conclusion

AI literacy is a democratic skill. It is the competence to co-create with machines, to defend dignity, and to govern complexity with clarity.

The future is not automation—it is augmentation. When humans, biotic machines, and ambient abiotic systems collaborate in ethical ways, care and learning become more relational, more reflective, and more human.

Symbiosis is already here—it’s just hard to see.

9. Case Study Infoboxes

Infobox 1: Georgia Tech – Jill Watson

Students: ~300 per semester; AI trained on ~40,000 forum posts

Outcome: Freed human TAs to mentor; gradual AI rollout ensured quality control

Sources: OECD, ACM Digital Library

Infobox 2: Ambient Intelligence (Stanford)

System: AI room sensors in hospitals and homes

Function: Detect hygiene lapses, falls, anomalies

Governance: Transparent data use and privacy protocols

Sources: Stanford Engineering, Georgia Tech PE

Infobox 3: Nobi Smart Lamps

Outcomes: 84% fewer falls, 100% detection accuracy

Rollout: 50 care homes, 500 rooms

Note: Vendor data—requires independent validation

Sources: Nobi, ScienceDaily, Digital Care Hub

References (APA Style)

Barrat, J. (2013). Our Final Invention: Artificial Intelligence and the End of the Human Era. Thomas Dunne Books.

European Union. (2024). Artificial Intelligence Act. https://artificialintelligenceact.eu/

Georgia Institute of Technology. (n.d.). Georgia Tech News Center. https://news.gatech.edu/

Georgia Tech Professional Education. (n.d.). GTPE Blog. https://pe.gatech.edu/blog

International Society for Technology in Education. (n.d.). ISTE Case Study. https://www.iste.org/resources/case-studies

Nobi. (n.d.). https://nobi.life/

Organisation for Economic Co-operation and Development. (2024). AI Policy Briefs. https://www.oecd.org/ai/

ScienceDaily. (n.d.). https://www.sciencedaily.com/

Stanford University. (n.d.). Stanford Engineering. https://engineering.stanford.edu/
